Introduction: Data quality is no longer an afterthought

“There is no AI strategy without a data strategy.” This statement from Snowflake CEO Sridhar Ramaswamy wasn’t just a soundbite; it was the central theme of Snowflake Summit 2025. From Cortex AI SQL to Openflow and the Postgres acquisition, one principle became clear: the future of AI and enterprise applications is grounded in the quality, reliability, and observability of data.

In this article, we look at the key product announcements that stood out at Snowflake Summit 2025.

1. Easy, Connected, Trusted: Snowflake’s AI Data Cloud in three words

Snowflake Co-founder and Head of Product Benoit Dageville opened the Summit by outlining how AI is becoming embedded across all domains and functions within the enterprise. He emphasized Snowflake’s transformation into a unified platform for intelligent data operations and distilled the company’s AI vision into three foundational principles: easy, connected, and trusted.

- Easy: AI development should be frictionless. A unified data platform must reduce complexity so teams can build and deploy faster.

- Connected: AI systems can’t operate in silos. Data and applications must move freely across organizational boundaries.

- Trusted: Governance isn’t an afterthought. Trust must be built into the platform through end-to-end visibility, control, and accountability.

This framework wasn’t just theoretical; it laid the groundwork for many of the product announcements that followed.

2. Open table formats are now first-class citizens

One of the clearest trends from the Summit was Snowflake’s deeper alignment with the open data ecosystem, especially Apache Iceberg. With support for native Iceberg tables and federated catalog access, Snowflake positioned itself as a format-agnostic, interoperable layer, regardless of whether your architecture follows a lakehouse, data mesh, traditional warehouse, or hybrid model.

This move underscores the growing need to unlock data access and analysis across open and managed environments, enabling teams to build, scale, and share advanced insights and AI-powered applications faster. Snowflake’s commitment to open interoperability was also reflected in its expanded contributions to the open-source ecosystem, including Apache Iceberg, Apache NiFi, Modin, Streamlit, and the incubation of Apache Polaris.

“We want to enable you to choose a data architecture that evolves with your business needs,” said Christian Kleinerman, EVP of Product at Snowflake.

To support this vision in practice, Snowflake also announced deeper interoperability with external Iceberg catalogs such as AWS Glue and Hive Metastore, allowing teams to query data where it lives without moving it.

Enhanced compatibility with Unity Catalog further reflects a broader trend: governance and lineage must now extend across formats, clouds, and tooling ecosystems, not just within a single vendor stack. These updates position Snowflake not only as a data platform but also as a flexible control plane for AI-ready architectures, one where open data, external catalogs, and trusted analytics can operate in sync.

3. Eliminating silos with Openflow’s unified and autonomous ingestion

Snowflake Openflow marks a significant step in simplifying data ingestion across structured and unstructured sources. Built on Apache NiFi, it offers an open, extensible interface that supports batch, streaming, and multimodal pipelines within a unified framework.

Users can choose between a Snowflake-managed deployment and a bring-your-own-cloud setup, which gives hybrid and decentralized teams flexibility. Crucially, Openflow applies the same engineering and governance standards to unstructured data pipelines as it does to structured ones, enabling teams to build reliable data products regardless of source format.

During the keynote, EVP of Product Christian Kleinerman also previewed Snowpipe Streaming, a high-throughput ingestion engine (up to 10 GB/s) with multi-client support and data that is queryable immediately after ingestion.

Together, these advancements aim to eliminate siloed ingestion workflows and reduce operational friction without compromising reliability at the point of ingestion.
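To make the idea of reliability at the point of ingestion concrete, here is a minimal sketch of validating records as they arrive and quarantining the ones that fail. It is not Openflow or Snowpipe Streaming code; the record fields (`order_id`, `amount`, `currency`), the rules, and the function names are all hypothetical and chosen only for illustration.

```python
from typing import Any, Callable

# Hypothetical record shape and rules, chosen only for illustration.
Record = dict[str, Any]
Rule = Callable[[Record], bool]


def _is_non_negative_number(value: Any) -> bool:
    # bool is a subclass of int in Python, so exclude it explicitly.
    return (
        isinstance(value, (int, float))
        and not isinstance(value, bool)
        and value >= 0
    )


# Each rule has a human-readable name so failures are easy to report.
RULES: dict[str, Rule] = {
    "order_id is present": lambda r: bool(r.get("order_id")),
    "amount is a non-negative number": lambda r: _is_non_negative_number(r.get("amount")),
    "currency is a 3-letter code": lambda r: isinstance(r.get("currency"), str)
    and len(r["currency"]) == 3,
}


def failed_rules(record: Record) -> list[str]:
    """Return the names of all rules the record violates."""
    return [name for name, rule in RULES.items() if not rule(record)]


def split_batch(
    batch: list[Record],
) -> tuple[list[Record], list[tuple[Record, list[str]]]]:
    """Separate a batch into accepted records and quarantined ones.

    Quarantined records are paired with the rules they failed, so a
    downstream process can report or repair them.
    """
    accepted: list[Record] = []
    quarantined: list[tuple[Record, list[str]]] = []
    for record in batch:
        failures = failed_rules(record)
        if failures:
            quarantined.append((record, failures))
        else:
            accepted.append(record)
    return accepted, quarantined
```

Calling `split_batch` on each incoming batch keeps bad records out of downstream tables while preserving them, along with the reasons they failed, for later review.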

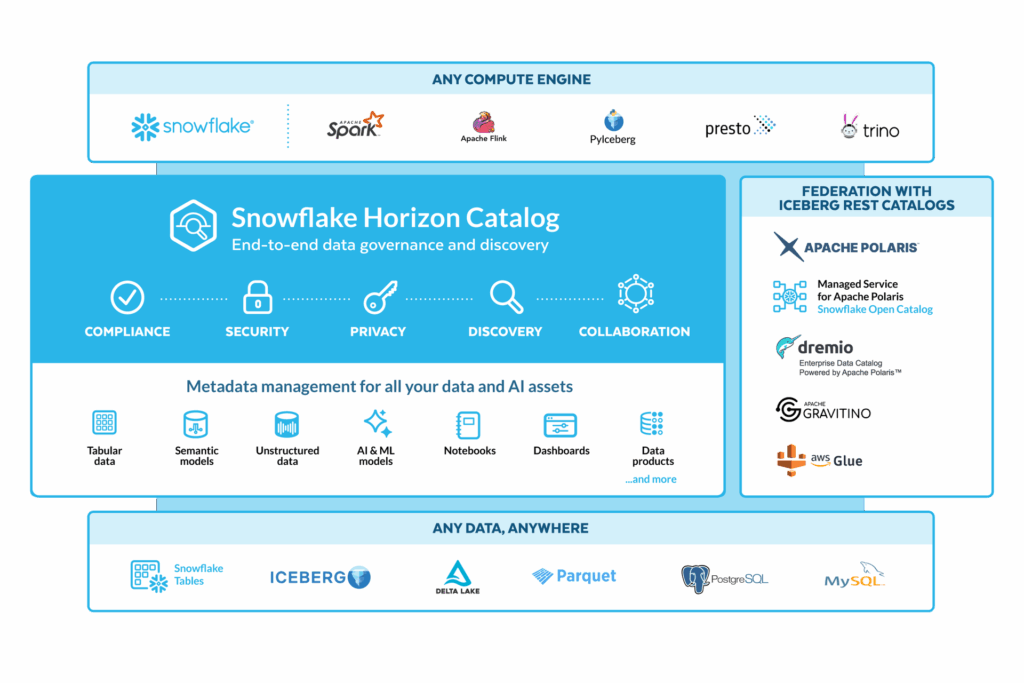

4. Metadata governance for the AI era: Horizon Catalog and Copilots

Snowflake unveiled Horizon Catalog, a federated catalog designed to unify metadata across diverse sources, including Iceberg tables, dbt models, and BI tools like Power BI. This consolidated view provides both lineage and context across structured and semi-structured datasets, which is critical for organizations embracing decentralized data ownership models or a data mesh architecture.

In addition, the new Horizon Copilot brings natural language search, usage analysis, and lineage insights to the forefront, making it easier for teams to discover, understand, and validate data across their stack.

As enterprises shift to more decentralized models of data ownership, this level of federated visibility and governance becomes essential to ensuring reliability at scale, especially when data flows across pipelines, clouds, and tools.
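As a rough illustration of why unified lineage matters, the sketch below walks a small lineage graph to find everything downstream of one table. It does not use the Horizon Catalog API; the asset names and the `LINEAGE` mapping are hypothetical, standing in for metadata that a federated catalog would gather from Iceberg tables, dbt models, and BI tools.

```python
from collections import deque

# Hypothetical lineage edges: each asset maps to the assets that read from it.
LINEAGE: dict[str, list[str]] = {
    "iceberg.raw_orders": ["dbt.stg_orders"],
    "dbt.stg_orders": ["dbt.fct_revenue", "dbt.dim_customers"],
    "dbt.fct_revenue": ["powerbi.revenue_dashboard"],
    "dbt.dim_customers": [],
    "powerbi.revenue_dashboard": [],
}


def downstream_assets(graph: dict[str, list[str]], start: str) -> list[str]:
    """Return every asset reachable from `start`, in breadth-first order.

    The start asset itself is excluded. A visited set guards against
    cycles in the lineage metadata.
    """
    seen = {start}
    ordered: list[str] = []
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for child in graph.get(node, []):
            if child not in seen:
                seen.add(child)
                ordered.append(child)
                queue.append(child)
    return ordered
```

With lineage from every tool in one graph, a quality issue in `iceberg.raw_orders` can be traced through to the dashboard it eventually feeds. Without that federated view, the traversal would stop at the boundary of each tool.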

5. Semantic views and context-aware AI Signals

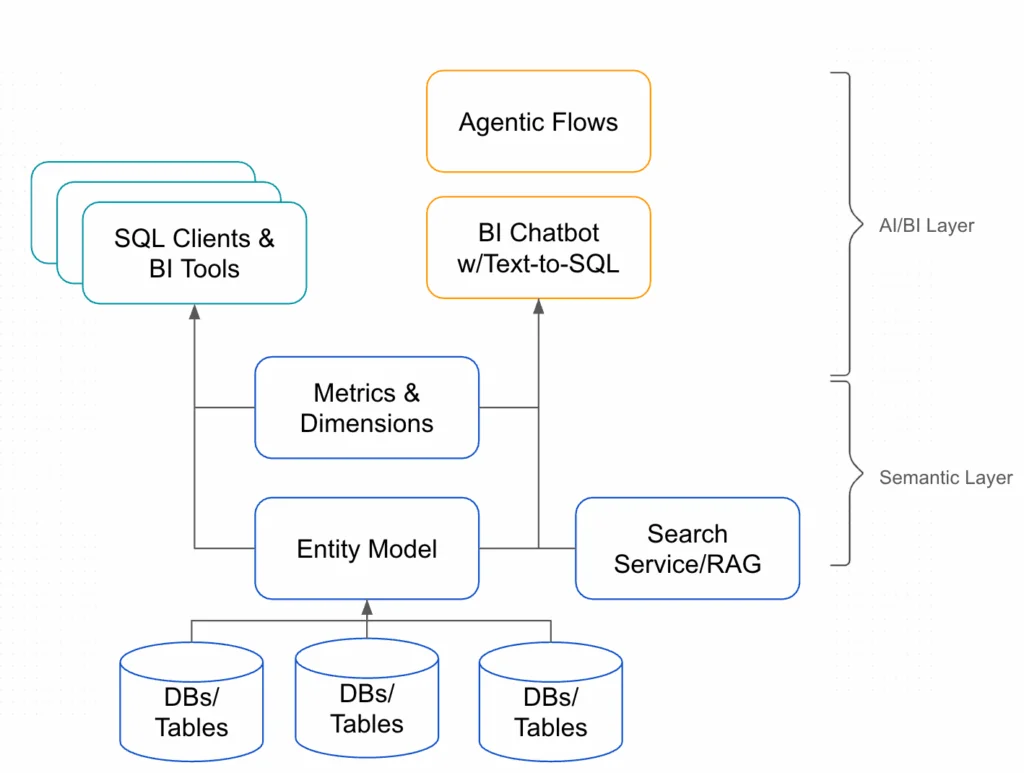

Snowflake’s introduction of Semantic Views and Cortex Knowledge Extensions marks a strategic shift toward embedding domain logic directly into the data platform. Semantic Views provide a standardized layer for business logic, enabling consistent metrics, definitions, and calculations across tools. This is especially critical when powering AI models that rely on aligned semantics for trustworthy insights.
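The sketch below shows the general idea behind a semantic layer: each metric is defined once, and every consumer computes it through the same definition. This is not Snowflake's Semantic Views syntax; the metric names, the row fields (`price`, `quantity`, `discount`), and the `compute_metric` helper are hypothetical.

```python
from typing import Callable

Row = dict[str, float]
Metric = Callable[[list[Row]], float]

# Hypothetical metric definitions, declared once and shared by all consumers.
METRICS: dict[str, Metric] = {
    "gross_revenue": lambda rows: sum(r["price"] * r["quantity"] for r in rows),
    "net_revenue": lambda rows: sum(
        r["price"] * r["quantity"] - r["discount"] for r in rows
    ),
}


def compute_metric(name: str, rows: list[Row]) -> float:
    """Compute a named metric using its single shared definition."""
    if name not in METRICS:
        raise KeyError(f"Unknown metric: {name}")
    return METRICS[name](rows)
```

Because a dashboard, a notebook, and an AI agent would all call `compute_metric("net_revenue", rows)`, they cannot drift into slightly different definitions of the same KPI.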

Cortex Knowledge Extensions allow teams to inject metadata, rules, and domain-specific guidance into their LLMs and copilots, improving accuracy and reducing hallucinations. For data teams building AI-native pipelines, this means more context-aware signal processing, less noise in anomaly detection, and alerts that reflect business impact.

6. Accelerating AI and DataOps without compromising trust

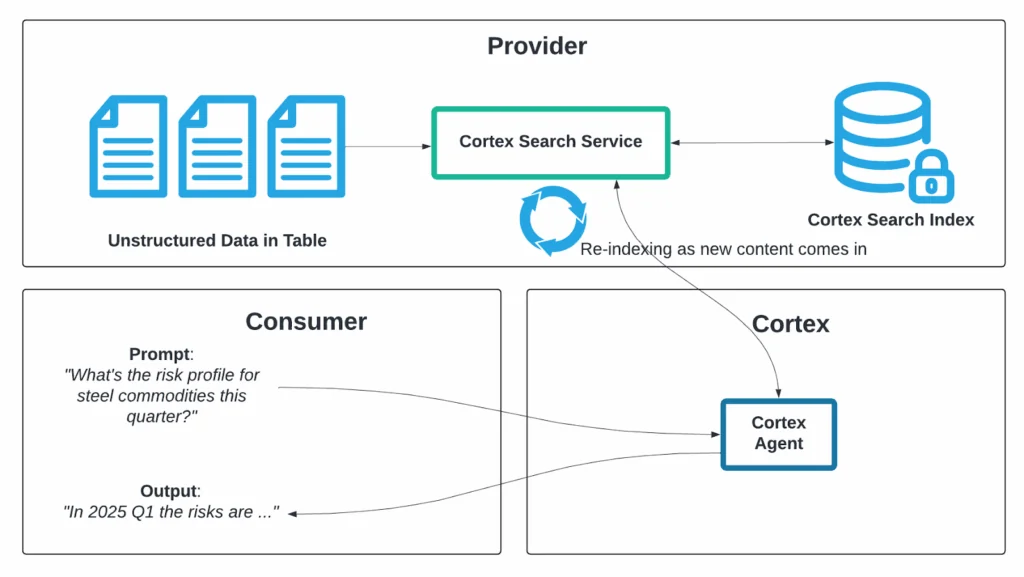

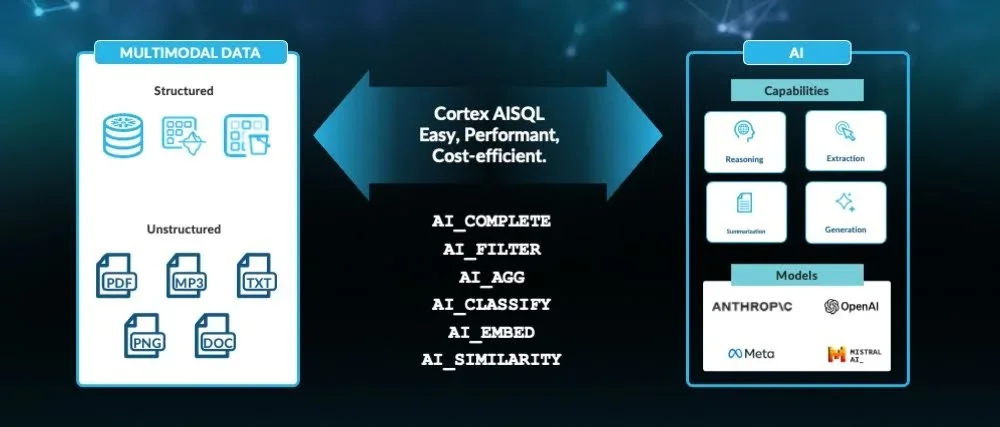

Snowflake doubled down on operationalizing AI across the enterprise with product updates aimed at trust, speed, and precision. Cortex AI SQL brings LLM capabilities to familiar SQL workflows, allowing users to build natural language-driven queries while maintaining governance. Paired with Snowflake Intelligence and Document AI, these tools reflect a growing push toward embedded agents and copilots that enhance productivity without compromising oversight.

These updates underscore a broader trend: enabling faster AI development cycles while preserving the reliability, auditability, and explainability of decisions made downstream. For data teams, this means aligning DataOps with MLOps and building safeguards that scale with velocity.

Final Thoughts: What This Means for Data Teams

Snowflake Summit 2025 went well beyond feature releases. It reflected a deeper shift in how enterprise data architectures are designed and governed:

- Open formats such as Apache Iceberg and Delta Lake are not just supported; they’re foundational to modern, flexible architectures.

- Ingestion at scale is now coupled with expectations of real-time validation and trust at the entry point.

- Governance is moving from static policies to intelligent automation and embedded lineage.

- AI precision demands semantic alignment and metadata context from the start.

From Horizon Catalog to Cortex AI SQL and Openflow, Snowflake is designing for a world where AI-powered insights must be both fast and dependable. For data teams, this means architecting systems where reliability, explainability, and agility are not trade-offs but baseline requirements.

As Snowflake doubles down on support for open formats and distributed pipelines, AI-powered data observability tools like Telmai help ensure that your data quality scales with your architecture. Whether you’re onboarding Iceberg tables, streaming data through Openflow, or aligning KPIs via semantic layers, Telmai integrates natively into your existing data architecture to proactively monitor your data for inconsistencies and validate every record before it impacts AI and analytics outcomes.

Are you looking to make your Snowflake pipelines AI-ready? Click here to talk to our team of experts to learn how Telmai can accelerate access to trusted and reliable data.
