Scaling data reliability for lakehouses built on open table formats

Learn how enterprises are scaling data reliability across data lakehouses built on Iceberg, Delta Lake, and Hudi with native data observability and data quality frameworks.

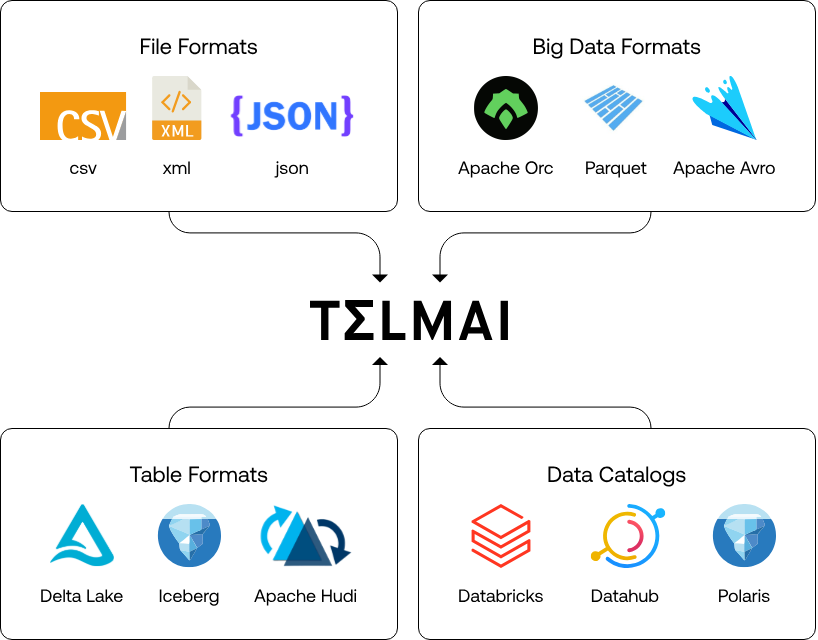

The rapid expansion of data sources, formats, and real-time analytics needs is forcing organizations to rethink how their data architectures are designed. Data teams are adopting modular lakehouse architectures powered by open table formats such as Apache Iceberg, Delta Lake, and Apache Hudi. These modern architectures combine the scalability of data lakes with the reliability and performance of warehouses, while supporting seamless integration across a growing ecosystem of tools and platforms.

However, as these environments become more distributed and dynamic, ensuring data reliability across structure, volume, and velocity becomes an increasingly complex challenge.

In this article, we’ll explore why enterprises are opting for open table formats, the unique data quality hurdles they face, and how organizations can rethink data quality for this new era. Along the way, we draw on real-world lessons from enterprise adopters and practical strategies for building scalable reliability frameworks native to open table formats.

Why are enterprises opting for open table formats?

Traditional data warehouses have long been the backbone of structured, SQL-based analytics. While they offer robust performance, they often have limitations in flexibility and scalability. Handling semi-structured or unstructured data at scale can be challenging, and integrating new technologies or migrating workloads frequently involves costly, manual transformations, leading to vendor lock-in.

To address scalability and cost concerns, many organizations have transitioned to data lakes built on object storage solutions like Amazon S3, Azure Blob Storage, or Google Cloud Storage. These environments facilitate the storage of vast amounts of raw data in formats such as JSON, Parquet, or Avro. However, data lakes inherently lack features like ACID transactions, efficient schema enforcement, and optimized query performance, making them difficult to govern and operationalize at scale.

This growing complexity has driven the need for standardized, queryable data formats that can work consistently across tools, teams, and cloud environments. As Pankaj Yawale, Senior Principal Data Architect at ZoomInfo, puts it, “Ensuring that data is stored in a standardized format and structure is critical not only for functional use cases—such as efficient querying, analysis, and consumption—but also for non-functional requirements like governance, reliability, and data quality.”

Industry leaders are increasingly aligning with this view. As Kai Waehner, Global Field CTO at Confluent, notes, “An open table format framework like Apache Iceberg is essential in the enterprise architecture to ensure reliable data management and sharing, seamless schema evolution, efficient handling of large-scale datasets and cost-efficient storage while providing strong support for ACID transactions and time travel queries.”

This vision of interoperable, modular data infrastructure has propelled the rise of open table formats like Apache Iceberg, Delta Lake, and Apache Hudi. These formats introduce a metadata layer over object storage that brings warehouse-like capabilities to data lakes, including:

- ACID Transactions: Ensuring reliable and consistent data operations.

- Schema Evolution: Allowing seamless modifications to data structures without disrupting existing queries.

- Time Travel: Enabling access to historical versions of data for auditing and rollback purposes.

- Efficient Querying: Improving performance through features like data skipping and partition pruning.
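To make the snapshot idea behind atomic commits and time travel concrete, here is a minimal sketch in plain Python. It is a toy in-memory model and not how Iceberg, Delta Lake, or Hudi are actually implemented; `ToyTable`, `Snapshot`, `commit`, and `read` are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Snapshot:
    """An immutable view of the table: the data files visible at one commit."""
    snapshot_id: int
    files: tuple


@dataclass
class ToyTable:
    """Hypothetical in-memory stand-in for a table format's metadata layer."""
    snapshots: list = field(default_factory=list)

    def commit(self, added=(), removed=()):
        # Build the new file list from the current snapshot, then publish it
        # as one new snapshot, so readers never see a half-applied change.
        current = self.snapshots[-1].files if self.snapshots else ()
        files = tuple(f for f in current if f not in removed) + tuple(added)
        snap = Snapshot(snapshot_id=len(self.snapshots) + 1, files=files)
        self.snapshots.append(snap)
        return snap.snapshot_id

    def read(self, snapshot_id=None):
        # Time travel: read the latest snapshot, or an earlier one by id.
        if not self.snapshots:
            return ()
        if snapshot_id is None:
            return self.snapshots[-1].files
        return self.snapshots[snapshot_id - 1].files


table = ToyTable()
v1 = table.commit(added=["part-0001.parquet"])
v2 = table.commit(added=["part-0002.parquet"], removed=["part-0001.parquet"])

latest_files = table.read()
historical_files = table.read(snapshot_id=v1)
```

Because old snapshots are never mutated, reading `v1` after the second commit still returns the original file list, which is the property that auditing and rollback rely on.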

By decoupling storage from compute, open table formats allow engines like Spark, Trino, Flink, and Presto to operate directly on object storage, eliminating the need for data duplication and enabling scalable analytics and AI workloads.

For instance, Apache Iceberg offers a SQL-first approach with multi-engine compatibility, making it suitable for complex analytical workloads. Its design facilitates direct querying from various engines and tools, streamlining data access and reducing latency.

As organizations increasingly adopt AI and machine learning, the ability to manage data versioning becomes crucial. Open table formats support maintaining multiple versions of datasets, aiding in reproducibility and model training processes.

Yet even with these capabilities, organizations still face a critical blind spot: visibility into data quality and pipeline health.

Why is data reliability still a challenge?

Open table formats like Apache Iceberg have dramatically advanced how organizations manage data layout, schema evolution, and multi-engine access, but they do not inherently solve for data reliability and observability.

Open table formats handle data layout and evolution, but not observability

While formats like Iceberg bring structure and governance to raw object storage, they don’t provide visibility into the actual health, accuracy, or consistency of data. ACID transactions and versioned metadata ensure that data can be read and written safely, but they don’t answer questions like:

- Has the incoming data drifted from expectations?

- Is the schema still aligned with downstream consumers?

- Are there anomalies in the data that could compromise analytics or AI models?
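The second question, whether the schema still matches what downstream consumers expect, can be sketched as a simple comparison. This is an illustrative example only: the function name `find_schema_drift` and the column names and type strings are assumptions, and a real check would read the schema from the table's metadata.

```python
def find_schema_drift(table_schema, expected_schema):
    """Compare a table's current columns with what a consumer expects.

    Both arguments map column name -> type name (plain strings here).
    """
    missing = sorted(set(expected_schema) - set(table_schema))
    unexpected = sorted(set(table_schema) - set(expected_schema))
    type_changes = {
        name: (expected_schema[name], table_schema[name])
        for name in sorted(set(expected_schema) & set(table_schema))
        if expected_schema[name] != table_schema[name]
    }
    return {"missing": missing, "unexpected": unexpected, "type_changes": type_changes}


# Illustrative schemas only; column names and types are made up.
expected = {"user_id": "long", "email": "string", "signup_ts": "timestamp"}
current = {"user_id": "string", "email": "string", "country": "string"}

drift = find_schema_drift(current, expected)
```

Here `drift` reports one missing column, one unexpected column, and one type change, none of which an ACID commit by itself would flag.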

As Pankaj Yawale, Senior Principal Data Architect at ZoomInfo, observed, “Iceberg’s built-in support for upserts, deletes, schema evolution, and time travel addressed our operational pain points directly. But ensuring ongoing data quality and lineage still required additional frameworks and validation at every stage.”

Warehouse-centric DQ tools don’t scale to open lakehouses

Most existing data quality frameworks were designed for traditional warehouse environments, with rigid schemas, centralized processing, and predictable ingestion. In contrast, modern lakehouses ingest semi-structured, evolving data from hundreds of sources, often at petabyte scale and across distributed, multi-cloud environments.

This shift exposes several architectural mismatches:

- Scalability gaps: Legacy DQ tools often can’t process large, continuously updating datasets efficiently, especially when validation runs on top of compute-heavy query engines.

- Lack of flexibility: These tools are not designed to handle schema drift, nested structures, or the heterogeneity of open table formats.

- Integration with distributed engines: Distributed stacks rely on engines like Spark, Trino, and Flink, all querying the same Iceberg tables from different contexts. Most DQ tools aren’t designed to monitor across these engines or handle compute/storage decoupling.

- Awareness of metadata and partitioning: Many tools ignore Iceberg’s rich metadata, missing opportunities for partition-aware, efficient, and context-sensitive validation.
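As a rough illustration of what partition-aware validation could look like, the sketch below checks only partitions written since the last validated snapshot and uses precomputed statistics instead of scanning rows. `PartitionStats` is a hypothetical structure, similar in spirit to the statistics a table format keeps in its metadata but not a real Iceberg type, and the 5% null threshold is an arbitrary assumption.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PartitionStats:
    """Hypothetical per-partition summary (not a real Iceberg structure)."""
    partition: str
    last_modified_snapshot: int
    record_count: int
    null_counts: dict


def validate_changed_partitions(stats, last_checked_snapshot, max_null_ratio=0.05):
    """Check only partitions written after the last validated snapshot."""
    failures = []
    for p in stats:
        if p.last_modified_snapshot <= last_checked_snapshot:
            continue  # unchanged since the previous run, skip it
        if p.record_count == 0:
            failures.append((p.partition, "empty partition"))
            continue
        for column, nulls in p.null_counts.items():
            if nulls / p.record_count > max_null_ratio:
                failures.append((p.partition, f"too many nulls in {column}"))
    return failures


stats = [
    PartitionStats("dt=2024-01-01", 10, 1000, {"email": 2}),
    PartitionStats("dt=2024-01-02", 12, 1000, {"email": 400}),
    PartitionStats("dt=2024-01-03", 13, 0, {}),
]
failures = validate_changed_partitions(stats, last_checked_snapshot=11)
```

The first partition is skipped entirely because it has not changed, which is where the cost savings of metadata-aware checks would come from.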

The result is often a proliferation of custom scripts, ad hoc validation jobs, and manual monitoring solutions that are brittle, hard to scale, and expensive to maintain.

The need for scalable data quality frameworks native to open table formats

To address the unique reliability challenges of modern lakehouse environments, organizations are rethinking their approach to data quality. They need data quality and observability frameworks purpose-built for open table formats, designed not just to monitor data but to operate seamlessly alongside it without introducing architectural bottlenecks or cost overhead.

Rethinking the architecture: Data Quality as an adjacent layer

Data reliability frameworks are shifting away from monolithic, warehouse-centric models. Instead, they operate as adjacent compute layers that run in parallel to the core data processing engines. This decoupled design offers several advantages:

- High Throughput & Scalability: Run massively parallel validation jobs across billions of records, unconstrained by the query limitations of Spark or Trino.

- Optimized Performance & Lower Cost: Offload data quality checks from production systems. Ephemeral compute can be spun up only when needed, avoiding persistent overhead.

- Enhanced Security & Governance: Operate directly on data in object storage, eliminating the need for data movement and supporting zero-trust architectures with read-only access.

Native support for open formats and cloud storage

For a data quality framework to function effectively in modern lakehouse environments, it must integrate natively with the core components of that architecture, including open formats and cloud-native object storage.

- Format Awareness: First-class support for open formats like Iceberg, Parquet, Avro, and JSON ensures validation logic understands nested schemas, partitions, and metadata.

- Cloud-Native Storage Compatibility: Direct compatibility with AWS S3, Azure Data Lake, and Google Cloud Storage allows frameworks to monitor data in place.

- Cross-System Validation: The ability to reconcile and compare datasets across multiple domains, systems, and ingestion paths is essential for organizations managing complex, federated pipelines.
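Cross-system validation can be pictured as a keyed reconciliation between two copies of the same data. The following is a minimal sketch under simplifying assumptions: both datasets fit in memory as lists of dicts, and `row_fingerprint` and `reconcile` are hypothetical helper names. A production version would also need a collision-safe way to serialize values before hashing.

```python
import hashlib


def row_fingerprint(row, columns):
    """Hash the selected columns of a row so values can be compared cheaply."""
    joined = "|".join(str(row.get(c)) for c in columns)
    return hashlib.sha256(joined.encode("utf-8")).hexdigest()


def reconcile(source_rows, target_rows, key, columns):
    """Compare two datasets by key and report missing, extra, and changed rows."""
    source = {r[key]: row_fingerprint(r, columns) for r in source_rows}
    target = {r[key]: row_fingerprint(r, columns) for r in target_rows}
    shared = source.keys() & target.keys()
    return {
        "missing_in_target": sorted(source.keys() - target.keys()),
        "extra_in_target": sorted(target.keys() - source.keys()),
        "mismatched": sorted(k for k in shared if source[k] != target[k]),
    }


# Made-up rows standing in for the same entity in two systems.
warehouse_rows = [{"id": 1, "amount": 10.0}, {"id": 2, "amount": 20.0}, {"id": 3, "amount": 30.0}]
lakehouse_rows = [{"id": 1, "amount": 10.0}, {"id": 2, "amount": 25.0}, {"id": 4, "amount": 40.0}]

report = reconcile(warehouse_rows, lakehouse_rows, key="id", columns=["amount"])
```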

Intelligent and embedded data quality across the lifecycle

Traditional rule-based systems often fall short in modern lakehouse environments, where data is semi-structured, dynamic, and continuously evolving. To keep up with this scale and complexity, leading data quality frameworks now embed machine learning to automate, adapt, and scale validation efforts across the entire data lifecycle.

Using ML, these frameworks can proactively detect anomalies—whether it’s schema drift, shifts in value distributions, or sudden spikes in volume—before issues impact downstream pipelines or AI models. More advanced systems go a step further by recommending or auto-tuning quality rules based on historical patterns, user behavior, and past incident data. This reduces the manual burden on data teams and accelerates time-to-value.
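For a sense of how a sudden change in volume might be flagged, here is a small sketch that uses a robust statistical baseline (a modified z-score based on the median absolute deviation) rather than a trained model. It is a simplified stand-in for the ML-driven detection described above, not any vendor's method; the function name, the sample row counts, and the 3.5 threshold are assumptions.

```python
from statistics import median


def is_volume_anomaly(history, latest, threshold=3.5):
    """Flag `latest` if its modified z-score against `history` exceeds `threshold`."""
    med = median(history)
    mad = median([abs(x - med) for x in history])
    if mad == 0:
        # All historical values are (nearly) identical: any change is unusual.
        return latest != med
    modified_z = 0.6745 * (latest - med) / mad
    return abs(modified_z) > threshold


# Made-up daily row counts for one table.
daily_row_counts = [1000, 1020, 980, 1010, 995, 1005, 990]

drop_detected = is_volume_anomaly(daily_row_counts, latest=400)
normal_day = is_volume_anomaly(daily_row_counts, latest=1015)
```

Using the median instead of the mean keeps one earlier bad day from distorting the baseline, which matters when the history itself may contain incidents.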

But intelligence alone isn’t enough. To be truly effective, data quality must be embedded at every stage of the data journey:

- Pre-Ingestion Checks: Identify malformed files, missing fields, or format mismatches before data even lands in your Iceberg tables.

- Batch and Streaming Validation: Apply consistent rules across batch pipelines, micro-batches, and real-time streams, ensuring quality regardless of ingestion method.

- Real-Time Observability: Continuously monitor data health, lineage, and statistical drift to catch issues early and enable rapid triage and resolution.
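The pre-ingestion stage in the list above can be sketched as a gate that separates well-formed records from rejects before anything is written to a table. This example assumes newline-delimited JSON input; the `REQUIRED_FIELDS` contract and the function name `precheck_json_lines` are hypothetical.

```python
import json

# Hypothetical data contract: field name -> expected Python type.
REQUIRED_FIELDS = {"event_id": str, "user_id": int, "ts": str}


def precheck_json_lines(lines, required=REQUIRED_FIELDS):
    """Split raw JSON lines into accepted records and (line_no, reason) rejects."""
    accepted, rejected = [], []
    for line_no, line in enumerate(lines, start=1):
        try:
            record = json.loads(line)
        except json.JSONDecodeError:
            rejected.append((line_no, "malformed JSON"))
            continue
        if not isinstance(record, dict):
            rejected.append((line_no, "not a JSON object"))
            continue
        missing = [f for f in required if f not in record]
        if missing:
            rejected.append((line_no, f"missing fields: {', '.join(missing)}"))
            continue
        wrong = [f for f, t in required.items() if not isinstance(record[f], t)]
        if wrong:
            rejected.append((line_no, f"wrong type: {', '.join(wrong)}"))
            continue
        accepted.append(record)
    return accepted, rejected


raw = [
    '{"event_id": "e1", "user_id": 1, "ts": "2024-01-01T00:00:00Z"}',
    '{"event_id": "e2", "user_id": "oops", "ts": "2024-01-01T00:01:00Z"}',
    '{"event_id": "e3", "ts": "2024-01-01T00:02:00Z"}',
    '{not json',
]
accepted, rejected = precheck_json_lines(raw)
```

Keeping the reject reasons alongside line numbers gives the triage step something concrete to act on, and the same function could in principle be reused in a batch job or a streaming consumer.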

By combining ML-driven intelligence with lifecycle-wide observability, modern frameworks empower data teams to move from reactive firefighting to proactive assurance, ensuring every data product built on Iceberg-native architectures is both trusted and resilient.

As Pankaj Yawale from ZoomInfo explains, Iceberg has reduced operational overhead and made data lineage and quality easier to manage over time:

“With Iceberg, we can update records at scale and maintain multiple versions of data using snapshots—all without maintaining extensive custom code or managing redundant copies of the data. This has greatly improved our data agility, reduced operational overhead, and significantly improved our ability to manage data lineage and quality over time.”

Conclusion: Embedding trust into every layer of the Lakehouse

As organizations scale across clouds, domains, and engines, the traditional approach of retroactive data cleansing is no longer viable. Instead, data teams must embed reliability into the fabric of their pipelines, combining native format support, lifecycle-wide observability, and ML-driven intelligence.

At Telmai, we’ve worked with large-scale, data-driven enterprises to help operationalize this vision. By providing observability and validation workflows architected specifically for Iceberg-native lakehouses, Telmai enables data teams to shift from reactive firefighting to proactive assurance, without sacrificing agility or performance.

For a deeper dive into making your AI initiatives data-ready from day one, download the Data Quality for AI whitepaper. It outlines clear, actionable steps to identify, address, and prevent data quality issues before they disrupt your models or decision-making.
